Social Web to Royal Machine

Metaphors for LLMs and meatspace as anti-environments

This is the fourth installment in a series on Archival Time, where I investigate the new cultural temporality introduced by LLMs.

Previously, in Archival Time, I wrote about the carnivalesque nature of the internet versus the archival-slice nature of LLMs.

In Prophetic Soft Technologies, I wrote about prediction markets as a response to the loss of narrative.

In Cozyweb Animals, I characterized cozyweb communities as a medium for self-regulation during permacrisis.

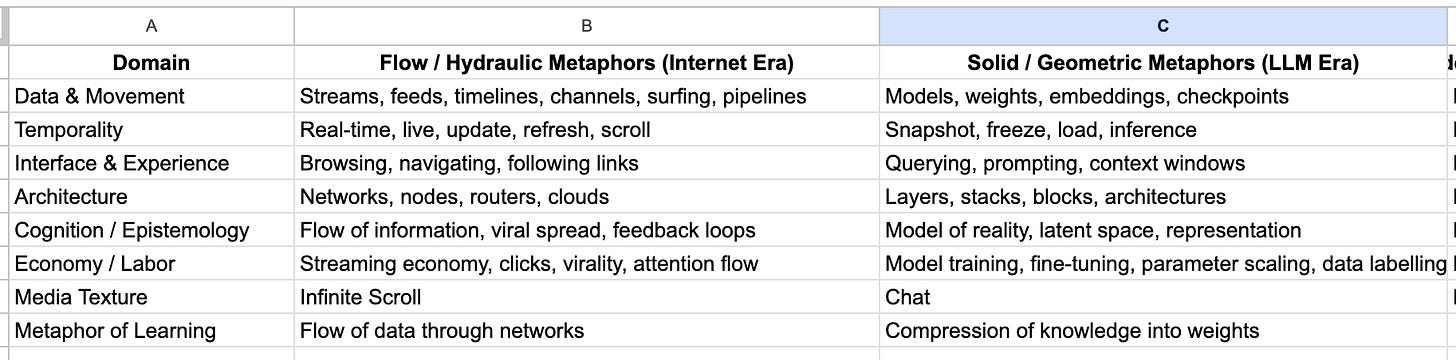

The Internet era, beginning with early network computing, has been dominated by hydraulic metaphors. We browse timelines and view streams; data flows through pipelines. LLMs, by contrast, crystallize streams and introduce solid metaphors such as models, model weights, and context windows. If the internet’s metaphors were oriented toward flow and line, the LLM’s metaphors are oriented toward space and plane.

***

The opposition between hydraulic and solid metaphors for technological paradigms has repeated throughout history. French philosophers Gilles Deleuze and Félix Guattari wrote about this extensively in A Thousand Plateaus, in the section titled Treatise on Nomadology. The easiest way to distill their dense philosophical reasoning is through an example that contrasts the hydraulic and solid metaphors: Gothic churches and Romanesque buildings.

Deleuze and Guattari point to the Gothic masons, whose cathedrals were built through operative geometry—a dynamic understanding of material and force. In Gothic architecture, unlike the earlier Romanesque style, the shape of the vault was not decided in advance as a perfect geometric form drawn on a plan. Instead, it emerged from the interplay of forces—how each stone’s cut distributed pressure and thrust to the next. Each curve, angle, and weight was responsive to the behavior of its neighbors. The vault, in other words, was not the execution of a single blueprint but the result of continuous adjustments, variations felt and measured on-site. This “minor” science of stonecutting was projective and empirical, guided by the behavior of matter itself rather than by pre-given equations.

According to Deleuze and Guattari, royal (or state) science does not erase the discoveries of nomad science; instead, it appropriates and formalizes them. The techniques of the Gothic masons became the foundation for what would later be codified as statics, mechanics, and architecture proper. What had been a moving, adaptive system was recast as a state machine: a model of forces rendered stable through rules, coordinates, and equations.

A more accessible example comes from navigation. For centuries, sailors found their way by reading the wind, currents, and stars directly, an embodied knowledge refined through experience, variation, and correction at sea. Over time, these living practices were absorbed into the cartographic systems of empires. The intuitive craft of steering by feel became the geometry of longitude and latitude, fixed routes, and maritime charts. What the sailor once adjusted in motion, the navigator could now calculate in advance. The world that had been known as a set of flows became a grid of positions.

The royal science retained the results of nomadic experimentation—routes, techniques, instruments—but stripped them of their local contingency. It replaced operative knowledge with representative knowledge: a view from above, where every movement could be predicted and coordinated. The “war machine” of the nomadic explorers was thus absorbed into the machinery of the State.

Now that the historical context is set, we’ll look closely at how AI architectures evolved from hydraulic metaphors to solid ones, and at what that means for the information environments in which we increasingly spend most of our lives.

The Age of Flows (1980s–2014)

Early neural networks adopted the hydraulic metaphors of the internet. They were designed for streams of text, sound, or images that flowed through networks. Most applications at the time—speech recognition, translation, search—were also sequential by nature. Hardware and memory limits made it easier to handle one piece of data at a time. These constraints made flow-based thinking feel natural.

Two main families defined this era: Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs).

RNNs: temporal streams

Recurrent networks were built to handle sequences—speech, text, or time-series data. They process information one step at a time. At each step the network takes the new input and combines it with a small internal memory of what came before. The current state depends on the new signal and the previous state. The model “remembers” by continually passing information forward. This makes RNNs good at patterns that unfold in order—sentences, melodies, sensor readings—but it also ties them to time itself. Each step must wait for the last, so training is slow and long-range memory is fragile.
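To make the step-by-step dependence concrete, here is a minimal sketch of a recurrent update. It assumes a single scalar state and hand-picked weights (`w_x`, `w_h`, `b` are illustrative names and values, not taken from any particular model); real RNNs use vectors and learned weight matrices.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One recurrent step: mix the new input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(inputs, w_x=0.5, w_h=0.9, b=0.0):
    """Process a sequence strictly in order, carrying one scalar state forward."""
    h = 0.0
    states = []
    for x in inputs:
        # Each step needs the previous state, so the loop cannot be parallelized.
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# A single pulse followed by silence: the trace of the pulse shrinks at every step.
states = run_rnn([1.0, 0.0, 0.0, 0.0])
```

In this toy setting the state stays positive but gets smaller with each silent step, which is one way to picture why long-range memory is fragile.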

Later versions, called Long Short-Term Memory (LSTM) networks, added “gates” that decide what to keep or forget. These act like small valves or dams that regulate the current, preventing the gradient from fading away. But even with these controls, the underlying picture remains hydraulic: information flows through a narrow temporal channel.
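The valve image can be sketched in a few lines. This is a toy scalar LSTM step under stated assumptions: the parameter dictionary `p` and the gate names are my own illustrative choices, and the weights are set by hand rather than learned.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step. `p` maps a gate name to (w_x, w_h, b)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))  # how much of the old cell state to keep
    i = sigmoid(pre("input"))   # how much new content to let in
    o = sigmoid(pre("output"))  # how much of the cell state to expose
    g = math.tanh(pre("cand"))  # candidate new content
    c = f * c_prev + i * g      # gated update of the cell state
    h = o * math.tanh(c)
    return h, c

# Valve open: forget gate near 1, input gate near 0, so the memory is carried along.
keep = {"forget": (0, 0, 10), "input": (0, 0, -10), "output": (0, 0, 0), "cand": (1, 0, 0)}
# Valve shut: forget gate near 0, so the old memory is drained.
drain = {"forget": (0, 0, -10), "input": (0, 0, -10), "output": (0, 0, 0), "cand": (1, 0, 0)}

_, c_kept = lstm_step(1.0, 0.0, 2.0, keep)
_, c_drained = lstm_step(1.0, 0.0, 2.0, drain)
```

With the forget gate held open, the cell state of 2.0 passes through almost untouched; with it closed, almost nothing survives.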

CNNs: spatial fields of local turbulence

Convolutional networks were created for vision. Instead of moving through time, they move across space. Imagine sliding a small stencil over an image to see what patterns lie beneath—edges, corners, or simple textures. Each stencil, called a filter or kernel, looks at a small region and records how strongly that feature appears. The next layer combines these local readings to recognize larger forms such as eyes, wheels, or letters.

A CNN learns by repeatedly scanning and adjusting its filters until it can detect the right patterns automatically. The process is local and empirical: each decision depends on nearby pixels, just as each stone in a Gothic arch depends on the neighboring stones that press against it. Global structure emerges gradually as the network layers multiply.
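The sliding stencil can be written out directly. This is a minimal sketch, assuming a hand-written edge filter rather than a learned one and a tiny made-up image; it computes what deep learning libraries call convolution (strictly speaking, cross-correlation) at stride 1 with no padding.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` and record the weighted sum at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0.0
            # Only the pixels under the stencil contribute: the decision is local.
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        out.append(row)
    return out

# A 4x4 image: dark left half (0), bright right half (1).
image = [[0, 0, 1, 1] for _ in range(4)]
# A hand-written vertical-edge filter (a trained CNN would learn its filters).
edge_kernel = [[-1, 1], [-1, 1]]
response = conv2d(image, edge_kernel)
```

The response is strongest in the middle column, exactly where dark meets bright, and zero where the neighborhood is uniform.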

Shared logic: learning through flow

Though one moves through time and the other through space, both RNNs and CNNs rely on local causality: each part affects only what lies next to it. They learn through flow, passing activation along and adjusting weights slightly at every step. Like the Gothic masons described by Deleuze and Guattari, they build by feel and feedback, not by abstract plan. Their intelligence is operative, not geometric. It arises from motion and adaptation within a space that is turbulent and uncertain, what Deleuze and Guattari would call smooth space.

The Transitional Period (2014–2016): When Flows Began to Fold

By the mid-2010s, the flow-based models of the neural age had reached their limits. RNNs could not easily hold long memories, and CNNs struggled to connect distant features within an image. The systems were powerful but myopic—they could see only what was nearby in space or time. Researchers began looking for a way to let information reach across distance, to let one part of a sequence “look back” or “look around” without depending entirely on the slow step-by-step flow.

Additive attention: the first regulator

In 2014, a team led by Dzmitry Bahdanau proposed a new idea for machine translation: attention. The problem was fundamental: in a standard RNN encoder-decoder, an entire sentence in one language had to be compressed into a single vector before being decoded into another language. Important details were often lost.

Attention changed that. Instead of squeezing all the information into one state, it let the model assign different levels of importance to different words in the input while generating each word in the output. At every step, the model calculated a set of small weights—numbers that said “focus here more, there less.” It was as if the network had gained the ability to glance backward and pick out what mattered most, rather than being carried helplessly by the current.

Technically, this involved computing a weighted average of the encoder’s hidden states, one for each input word, but conceptually it was a small revolution: the system began to measure its own flow. Attention acted like a valve or a surveyor, redirecting energy where it was needed.
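A toy version of that weighted average looks like this. It is a sketch in the spirit of additive attention, assuming scalar states and hand-set parameters (`w_s`, `w_h`, `v` are illustrative); the original formulation uses vectors and learned matrices.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def additive_attention(decoder_state, encoder_states, w_s=1.0, w_h=1.0, v=1.0):
    """Score each encoder state against the decoder state with a small tanh
    network, normalize the scores, and return the weights and their average."""
    scores = [v * math.tanh(w_s * decoder_state + w_h * h) for h in encoder_states]
    weights = softmax(scores)  # "focus here more, there less"
    context = sum(w * h for w, h in zip(weights, encoder_states))
    return weights, context

weights, context = additive_attention(0.0, [-1.0, 0.0, 2.0])
```

The weights are recomputed for every output word, so the decoder consults a different blend of the input each time instead of one fixed summary vector.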

Self-attention: a field of relations

A few years later, researchers extended the idea. If an output could attend to its input, perhaps every element in a sequence could attend to every other. This led to self-attention—a method where all the words or tokens in a sentence look at one another directly, comparing and weighting their mutual influence.

Now, instead of a single current flowing forward, the model began forming a web of connections. Each token could sense all the others at once. The sequence, once linear, unfolded into a field of relations—a surface of dependencies that could be scanned and adjusted in parallel.

This was the first true folding of the old smooth space. Attention introduced a plane of coordination, a way to chart forces rather than simply follow them. It remained a supporting tool inside RNNs, but it already carried the logic of a new order. The network was no longer just flowing; it was mapping.

The Geometric Turn (2017): Attention Is All You Need

In 2017, a paper titled Attention Is All You Need introduced a new architecture called the Transformer. It took the idea of self-attention and made it the entire foundation of the model. The result was a complete break from the old flow-based designs.

Until then, neural networks had worked either step by step (like RNNs) or patch by patch (like CNNs). Both approaches depended on local movement through time or space. The Transformer removed this dependency altogether. It allowed every part of a sequence to connect directly with every other part, all at once. Instead of a stream, it created a field—a two-dimensional map of relationships.

In a Transformer, each word, token, or pixel is turned into a vector—a small bundle of numbers that represent its meaning in context. The model then compares every vector with every other, measuring how closely related they are. These pairwise comparisons form what is called an attention map: a grid that shows which parts of the input matter most to each other.
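The grid of pairwise comparisons can be sketched as follows. This is a simplified illustration, assuming that queries, keys, and values are all the raw input vectors (a real Transformer applies learned projections first and uses several attention heads); the three example vectors are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections."""
    d = len(vectors[0])
    attention_map = []
    for q in vectors:
        # Compare this vector with every vector in the sequence, itself included.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        attention_map.append(softmax(scores))
    # Each output is a blend of all input vectors, weighted by one row of the map.
    outputs = [
        [sum(w * v[j] for w, v in zip(row, vectors)) for j in range(d)]
        for row in attention_map
    ]
    return attention_map, outputs

tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
attention_map, outputs = self_attention(tokens)
```

Every row of `attention_map` is computed independently of the others, which is why the whole grid can be filled in at once rather than step by step. Here the first token attends more to its near-twin than to the unrelated third token.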

This step converts language from a line to a plane. Words no longer depend only on what came before; they exist together in a shared space where relations can be calculated instantly. The model does not “remember” in the old sense.

Because all these comparisons can be done at once, the Transformer is also far more efficient to train on modern hardware. GPUs and TPUs excel at matrix multiplication, which is exactly what attention requires. What was once a slow, recursive process becomes a parallel computation that spans the whole sequence.

From flows to fields

Conceptually, the change is as deep as the technical one. The Transformer represents a shift from hydraulics to geometry—from forces moving through a channel to points organized on a surface. The model no longer flows through time; it projects patterns across space.

Where the earlier architectures resembled Gothic builders feeling their way upward through trial and correction, the Transformer resembles a royal architect drawing a complete plan. Attention provides the ruler, compass, and coordinate grid. The model no longer discovers form through motion; it imposes a structure that everything else must follow.

This was the decisive moment when AI moved from sequence to field, from the craftsman’s intuition to the surveyor’s map. It marked the beginning of the solid, spatial metaphors that now define the age of large language models.

I’m oversimplifying things a bit. Neither LLMs nor previous paradigms are purely defined by hydraulic or solid metaphors. Each has a mix of both types, but I’m focusing on the dominant ones. The Deleuzian frame would be that everything hydraulic is in the process of becoming solid and vice versa.

When the Environment Flips

To recap, early breakthroughs in artificial intelligence used hydraulic metaphors in their architecture because that was the dominant metaphor of the internet era. The breakthrough came when Attention Is All You Need ditched flow metaphors entirely and focused on attention across a field.

So, why does any of this matter to us? I posit that with LLMs crystallizing the digital information environment, we are at the beginning of a flip: our digital lives are becoming more striated, Romanesque, and dominated by solid metaphors, while in response meatspace is becoming more smooth, carnivalesque, Gothic, and dominated by flow metaphors. Theories around the death of the internet, crumbling institutions, and the cozyweb make more sense when you consider that all of these are information environments in transition from nomadic to royal, smooth to striated, or vice versa. To understand this flip, it is useful to look at Marshall McLuhan’s idea of environment and anti-environment.

In his 1966 essay The Relation of Environment to Anti-Environment, Marshall McLuhan argued that every medium creates a new environment—a total surround that shapes perception without revealing itself. Environments, he wrote, are “imperceptible except in so far as there is an anti-environment constructed to provide a means of direct attention.” We can only notice the water we swim in when something disturbs it. Art, in McLuhan’s view, once performed this role: the painting, the poem, the play were instruments of perception that made the invisible structures of ordinary life visible again.

But McLuhan also warned that when an environment reaches its most intense and complete form, it flips. At the peak of its power, a medium reverses into its opposite. The wheel made roads visible; the machine made nature visible; the circuit made the machine visible. Each new medium consumes the old and turns it into content. When this happens, the old environment hardens into form, and a new anti-environment arises to restore movement and awareness.

This is exactly what has happened with the internet. Born as a fluid, nomadic medium of flows—of packets, hyperlinks, and streams—it has, at scale, reversed into a system of grids and enclosures. The web’s hydraulic metaphors—streams, feeds, pipelines—have given way to solid ones: models, layers, weights, parameters. The environment of flow has become crystalline. What was once a smooth, open field now behaves like a royal architecture: measured, centralized, supervised. We live inside algorithmic cathedrals built from data rather than stone.

And as always, a new anti-environment emerges. The counter-force to this digital order now appears in meatspace, the physical and social world that the network once seemed to transcend. Real life has become the site of turbulence again, the place where the smooth reasserts itself. Political volatility, spontaneous swarm-like movements, and even seemingly random acts of violence are part of this counter-current. They are not outside the system but its reflection: the Gothic energies returning against the Roman plan. Some recent examples come to mind. NYC Mayor-elect Zohran Mamdani’s campaign used fonts and color palettes that evoked a distinct, hand-painted aesthetic. Grainy, analog-looking images, whether shot on film or digitally degraded, have dominated brand campaigns and Instagram feeds in the last few years. Perhaps the biggest example is that the U.S. government itself functions as a kind of “nomadic war machine”, improvising policy and intervention at odds with the formal, procedural “royal science” embodied in its laws and courts.

Higher education offers another striking case. The university was one of the purest expressions of royal science: a hierarchy of disciplines, credentialing rituals, and peer-review processes designed to fix knowledge into stable forms. It was already loosened by the Internet’s hydraulic forces—open-courseware, Wikipedia, MOOCs—but the arrival of large language models intensifies the disruption. Now a student can summon the archive directly, bypassing the institution’s linear sequence of lectures and degrees. In a sense, one royal science is undermining another: the LLM, a perfectly geometric engine of representation, erodes the older geometric order of academia.

The internet, in its maturity, has become a royal science of attention—precise, geometric, self-referential. Its anti-environment is forming in the streets, in cozyweb group chats, in unpredictable swarms where emotion and immediacy overtake design. Just as the Gothic masons worked by feel against the strict geometry of the Church, these emergent reactionary movements operate against the order of the new royal science. The environment has flipped, and with it, our sense of where the nomadic resides.

***

h/t

and for conversations that helped this piece.

Perhaps this non-regimented meat space is where No Kings lives, not as a violent opponent, but as a carnivalesque alternative to the abstract plane.

By the way, you are also describing the classical-baroque-rococo-classical cycle or even the tension between analytical and romantic modes. Gothic architecture is at its heart organic mimesis.